Nvidia’s meteoric rise to a $4 trillion market capitalization has been fueled by its dominance in AI chips, the foundational engines powering large language models and generative AI systems. But while much of the attention remains fixed on the company’s graphics processing units (GPUs), Nvidia is quietly making moves to ensure it stays indispensable to the next wave of computing—regardless of whether massive “AI mega-campuses” materialize as envisioned.

Nvidia’s Strategic Shift: From Chips to Systems

At the heart of this strategy is networking infrastructure, often referred to as the “plumbing” of the modern data center. For years, Nvidia’s competitive edge was its unrivaled GPUs, which trained and ran AI models at unprecedented scale. But as demand for AI infrastructure skyrockets, bottlenecks are shifting away from compute capacity toward networking—the ability to move vast volumes of data seamlessly between servers.

To address this, Nvidia has been investing heavily in InfiniBand and high-performance Ethernet networking, technologies it acquired through its $6.9 billion purchase of Mellanox, completed in 2020. These technologies let data centers link thousands of GPUs into tightly integrated "AI factories," reducing latency and maximizing throughput.

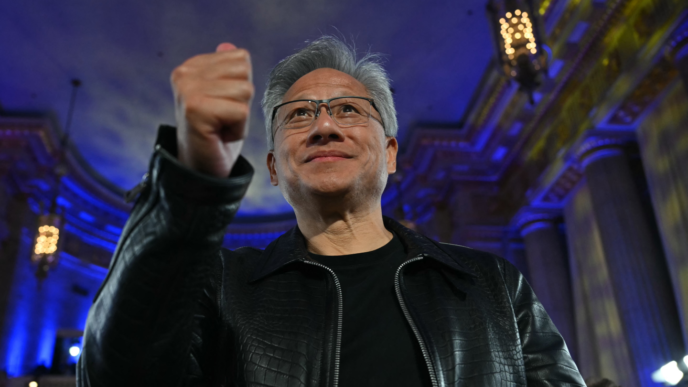

“AI compute isn’t just about chips—it’s about making every part of the system faster and more efficient,” said Nvidia CEO Jensen Huang earlier this year. “The data center is the new computer, and networking is its nervous system.”

Riding the Data Center Boom

Global spending on data centers is expected to soar as demand for AI services expands, but the scale and shape of that expansion remain uncertain. Some analysts warn that proposed mega-campuses—sprawling facilities with hundreds of thousands of GPUs—may face delays due to power shortages, supply chain constraints, and political concerns over resource consumption.

Nvidia's move into networking helps insulate the company from these risks. Even if mega-campuses are slow to appear, operators of every size still need advanced data center infrastructure. A hyperscale giant like Microsoft, a regional cloud provider in Asia, and a mid-sized enterprise all depend on a high-performance networking backbone of the kind Nvidia supplies.

Building an Ecosystem Lock-In

Nvidia’s networking products don’t just complement its GPUs—they reinforce them. By offering tightly coupled solutions that integrate compute, storage, and networking, Nvidia makes it harder for customers to switch to competitors.

For example:

- InfiniBand: Provides ultra-low latency, ensuring that thousands of GPUs work as one.

- BlueField DPUs (Data Processing Units): Offload infrastructure tasks such as networking, storage, and security from host CPUs, freeing those CPUs to keep GPUs supplied with data for AI workloads.

- CUDA software ecosystem: Ensures applications run seamlessly across Nvidia-powered hardware.

The result is an ecosystem where chips, software, and networking interlock—a “full-stack” dominance that competitors like AMD and Intel have struggled to replicate.

Market Implications

Nvidia's networking revenue is still dwarfed by its GPU business, but networking is one of the company's fastest-growing segments. Analysts predict that it could account for up to 20% of Nvidia's total revenue within the next five years, with demand driven by both AI and cloud computing.

Crucially, this diversification could leave Nvidia less exposed to the boom-and-bust cycles of chip demand. Even if AI spending slows, the broader trend toward more connected, data-intensive computing is unlikely to reverse.

Competition on the Horizon

Nvidia is not alone in seeing networking as the next frontier. Companies like Broadcom, Cisco, and Arista Networks are all vying to dominate AI data center connectivity. Still, Nvidia’s integration of networking with its core GPU products gives it an advantage: customers looking to build cutting-edge AI clusters often see Nvidia’s “one-stop shop” approach as more efficient.

That said, regulators are watching closely. Nvidia’s grip on the AI hardware supply chain has already raised antitrust concerns in the U.S. and Europe. A deeper expansion into networking could trigger fresh scrutiny.

The Long Game

For Nvidia, the message is clear: the AI chip boom may have made it the most valuable company in the world, but the broader data center ecosystem is what will keep it there. Whether AI mega-campuses rise as planned or not, Nvidia's bet on networking gives it a stake in nearly every layer of the digital infrastructure.

As one analyst put it: “If GPUs are the brains of AI, networking is the bloodstream. Nvidia doesn’t just want to sell brains—it wants to own the whole body.”